Machine learning for QCD

We are living in the midst of the era of computation, and no advance has been as striking as that in machine learning. From natural language processing to image generation, machine learning models have become astonishingly adept at tasks that were once thought to be unique to humans. These developments have led to a rapidly growing range of technological applications, from revolutionizing software development to powering self-driving cars. They have also propelled a growing number of scientific advances, from predicting the structure of proteins to identifying rare collision topologies at the Large Hadron Collider (LHC). Below, I highlight two areas where I have applied machine learning and statistics to understand emergent properties of the strong nuclear force.

Jet classification and generative design

In high-energy particle colliders, hundreds or even thousands of particles are created in a single collision event. Encoded in the correlations between these particles are hints about the underlying physical laws of nature, such as whether new "Beyond the Standard Model" particles exist. The LHC has recorded trillions of independent and identically distributed collisions, providing a rich and complex data set for analysis.

Machine learning offers two ways to extract new insights from this data set: it can classify different types of events, and it can help decide which subsets of events to study in greater detail. Unlike in many applications, however, our goal is not merely to group the events but to compare experimental measurements with theoretical calculations, so interpretability and uncertainty quantification are of paramount importance.

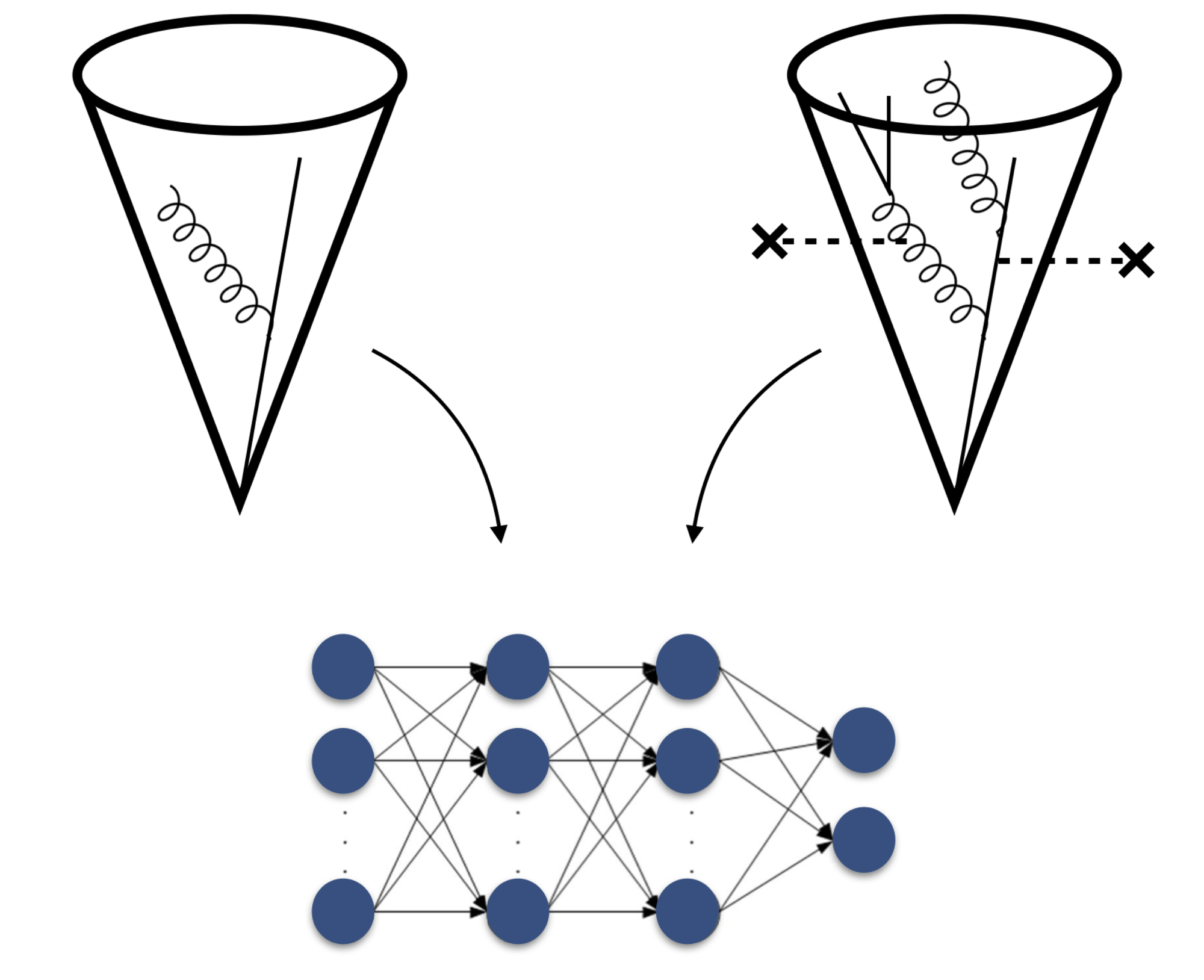

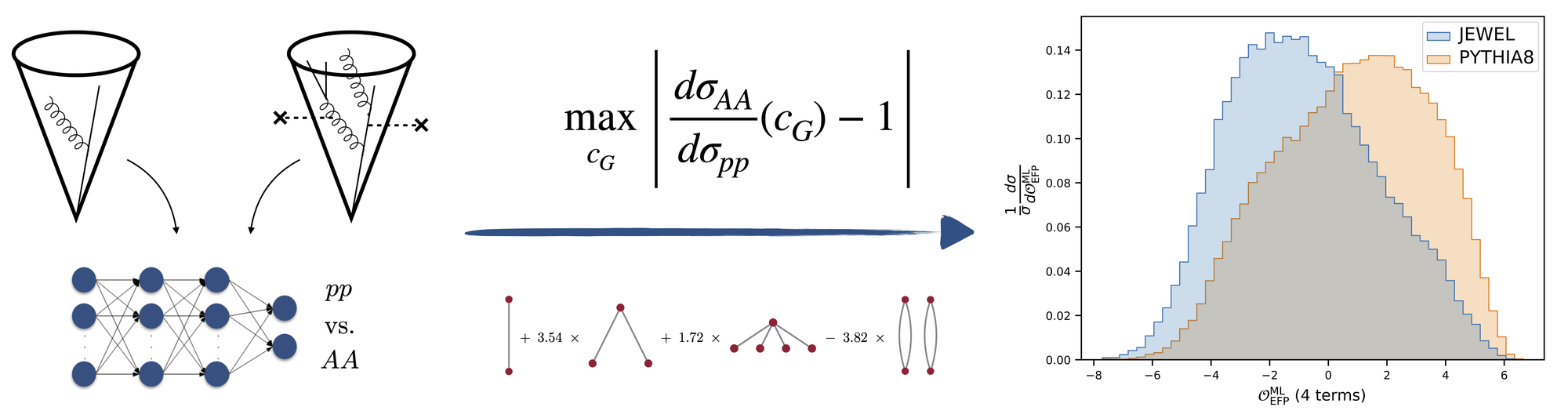

One of the key elements of analyzing a set of collision events is jet classification (see the previous post on jet measurements at the LHC). For example, to search for new particles it is important to distinguish jets arising from the fragmentation of a gluon from jets arising from the decay of a W boson. This is a task for supervised learning: there are two specific, labeled classes of jets we would like to separate. In my research, I have developed novel machine learning applications to classify jets in proton-proton collisions versus jets in heavy-ion collisions [1], as well as jets of different topologies [2] or quark flavors [3]. To do this, I employed permutation-invariant neural networks such as graph neural networks. These architectures are well suited to jet classification because the particles in a jet have no natural ordering, so a collision event is naturally represented as a point cloud or graph.
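To make the permutation-invariance argument concrete, here is a minimal NumPy sketch of a Deep Sets-style classifier, the same idea that underlies Particle Flow Networks. It is a simpler permutation-invariant architecture than a graph neural network and is not the model used in the work cited above. The weights `W_phi` and `W_rho` are hypothetical random stand-ins for trained parameters, and the (pT, eta, phi) input features are an assumed toy representation of jet constituents.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical untrained weights; a real classifier would learn these from labeled jets.
W_phi = rng.normal(scale=0.1, size=(3, 16))  # per-particle map: (pT, eta, phi) -> 16 latent features
W_rho = rng.normal(scale=0.1, size=(16, 1))  # map from the pooled latent vector to one logit


def deep_sets_score(particles: np.ndarray) -> float:
    """Return a class probability for a jet given as an (n_particles, 3) array.

    phi is applied to every particle independently, the results are summed
    (a symmetric pooling operation), and rho maps the sum to a probability.
    Because a sum does not depend on ordering, neither does the output.
    """
    latent = np.maximum(particles @ W_phi, 0.0)  # phi: linear layer + ReLU, per particle
    pooled = latent.sum(axis=0)                  # order-independent pooling over particles
    logit = pooled @ W_rho                       # rho: linear readout, shape (1,)
    return float(1.0 / (1.0 + np.exp(-logit[0])))  # sigmoid -> probability of one class


jet = rng.normal(size=(30, 3))            # toy jet with 30 constituents
shuffled = rng.permutation(jet, axis=0)   # same constituents, different order
print(deep_sets_score(jet), deep_sets_score(shuffled))
```

The two printed scores agree up to floating-point rounding. A graph neural network achieves the same property by combining message passing with a symmetric aggregation (sum, mean, or max) over neighbors.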

In addition to classifying jets, machine learning can be used for creative discovery. To do this, we go one step beyond classification and identify the important features that distinguish the classes of interest. Understanding these salient features in turn allows us to design observables: using a method known as symbolic regression, we generate mathematical expressions that maximize the discriminating power between the classes. Unlike full collision events, these observables can be calculated with the tools of theoretical particle physics; that is, we use machine learning to guide our theoretical focus. With this idea, I designed maximally informative observables that are analytically calculable, proposing new measurements at the LHC [1] and at the future Electron-Ion Collider [3].
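As an illustration of searching expression space for a discriminating observable, the sketch below does a deliberately tiny exhaustive search over expressions of the form `x op y`, scoring each by its ROC AUC between two classes. This brute-force search is a stand-in for symbolic regression, not the method used in the cited work. Real symbolic-regression tools (for example, genetic-programming libraries such as PySR) explore far larger expression spaces. The features `a`, `b`, `c` and their distributions are hypothetical toy data in which only the ratio of `b` to `a` carries class information.

```python
import itertools

import numpy as np

rng = np.random.default_rng(1)
n = 2000


def make_jets(ratio_low: float, ratio_high: float) -> dict:
    """Toy 'jets' with three positive features; only b/a differs between classes."""
    a = rng.uniform(1.0, 10.0, n)
    b = a * rng.uniform(ratio_low, ratio_high, n)
    c = rng.uniform(1.0, 10.0, n)  # pure noise feature
    return {"a": a, "b": b, "c": c}


signal = make_jets(0.1, 0.5)      # hypothetical class with small b/a
background = make_jets(0.4, 1.0)  # hypothetical class with large b/a


def auc(s_sig: np.ndarray, s_bkg: np.ndarray) -> float:
    """ROC AUC via the Mann-Whitney rank statistic (continuous scores, no ties assumed)."""
    scores = np.concatenate([s_sig, s_bkg])
    ranks = np.argsort(np.argsort(scores)) + 1  # 1-based ranks of all scores
    n_s, n_b = len(s_sig), len(s_bkg)
    value = (ranks[:n_s].sum() - n_s * (n_s + 1) / 2) / (n_s * n_b)
    return max(value, 1.0 - value)  # the direction of a cut is irrelevant


ops = {"+": np.add, "-": np.subtract, "*": np.multiply, "/": np.divide}

# Candidate observables: each bare feature, plus "x op y" for ordered pairs of distinct features.
candidates = {name: (lambda d, name=name: d[name]) for name in signal}
for (x, y), (sym, op) in itertools.product(itertools.permutations(signal, 2), ops.items()):
    candidates[f"{x} {sym} {y}"] = lambda d, x=x, y=y, op=op: op(d[x], d[y])

scores = {expr: auc(f(signal), f(background)) for expr, f in candidates.items()}
best = max(scores, key=scores.get)
print(best, round(scores[best], 3))
```

The search recovers a ratio of `a` and `b` (either orientation gives the same AUC). The resulting expression is a closed-form observable, which is what makes this kind of output amenable to a theoretical calculation, unlike the weights of a neural network.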

Bayesian inference and Gaussian process emulators

The culmination of the scientific process is the comparison of theoretical predictions with experimental data. This has been, however, a notoriously difficult challenge in the study of the quark-gluon plasma. Since the discovery of the quark-gluon plasma in the 2000s, the field has sought to understand its emergent microscopic properties in a precise, quantitative way.

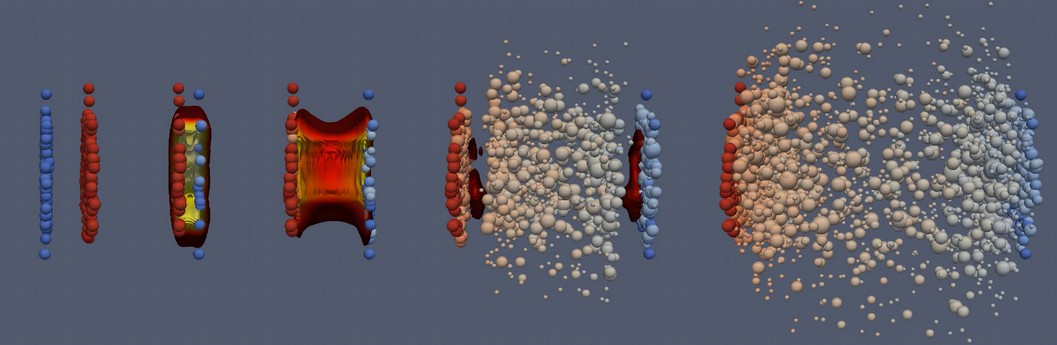

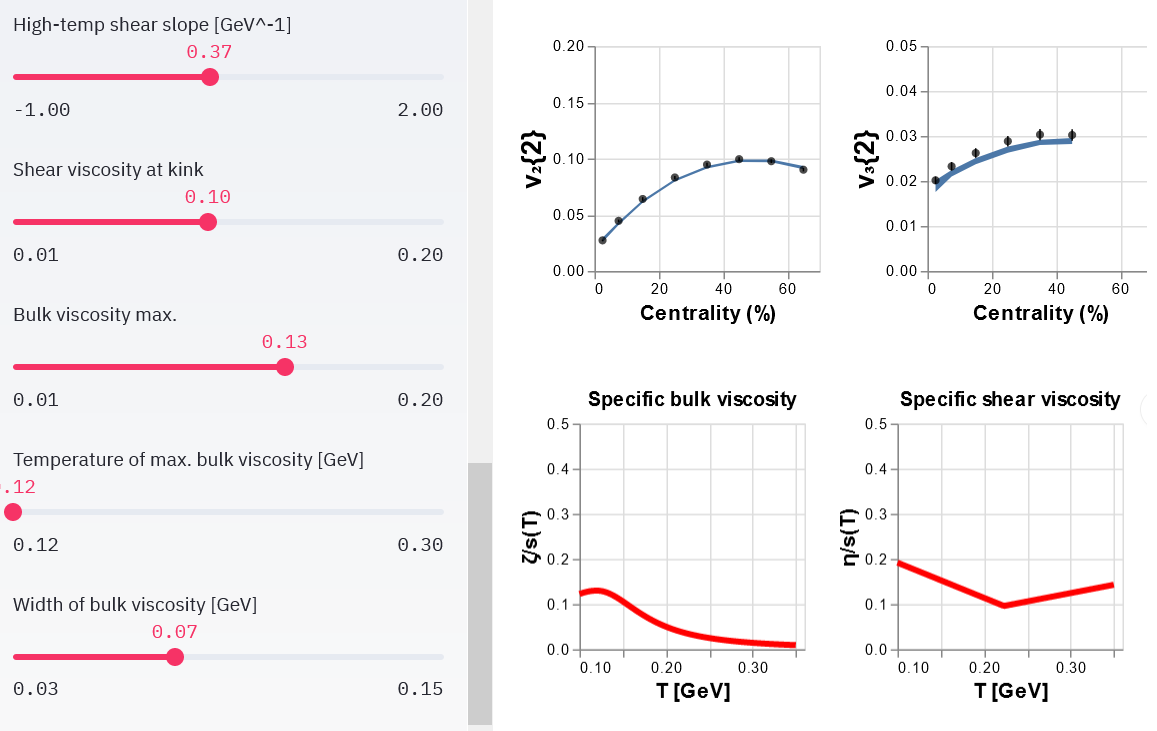

To do this, we can confront models of heavy-ion collisions, in which a droplet of quark-gluon plasma is briefly formed, with experimental measurements. Together with experimental and theoretical colleagues, I pursued this effort in the JETSCAPE collaboration. We have produced an open-source, modular framework to simulate heavy-ion collisions and to constrain the model parameters with experimental data using Bayesian parameter estimation. This process requires large-scale computing: the simulated model is computationally expensive, and Bayesian parameter estimation needs its predictions at many points in parameter space. To address this, we use Gaussian process emulators as non-parametric interpolators of our physics model with well-defined uncertainties. The full model is run at a limited set of design points, and the emulator predicts its output, together with an uncertainty, everywhere else.
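The core of a Gaussian process emulator fits in a few lines. The NumPy sketch below conditions a zero-mean GP with a squared-exponential kernel on a handful of "expensive" model runs and returns a posterior mean and standard deviation at new parameter values. The function `expensive_model`, the single parameter, and the fixed kernel hyperparameters are hypothetical simplifications; this is a minimal illustration of the technique, not the JETSCAPE implementation.

```python
import numpy as np


def rbf_kernel(x1, x2, length_scale=0.3, variance=1.0):
    """Squared-exponential covariance between two sets of 1D parameter points."""
    d = x1[:, None] - x2[None, :]
    return variance * np.exp(-0.5 * (d / length_scale) ** 2)


def gp_predict(x_train, y_train, x_test, noise=1e-6, **kernel_args):
    """Posterior mean and standard deviation of a zero-mean GP conditioned on the training runs."""
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_test, x_train, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)
    L = np.linalg.cholesky(K)                                  # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))  # alpha = K^{-1} y
    mean = K_s @ alpha
    v = np.linalg.solve(L, K_s.T)
    cov = K_ss - v.T @ v                                       # posterior covariance
    std = np.sqrt(np.clip(np.diag(cov), 0.0, None))            # clip tiny negative round-off
    return mean, std


def expensive_model(theta):
    """Hypothetical stand-in for one observable computed by a costly simulation."""
    return np.sin(3 * theta) + 0.5 * theta


design = np.linspace(0.0, 2.0, 8)    # the few parameter points where we can afford full runs
outputs = expensive_model(design)
theta_grid = np.linspace(0.0, 2.0, 201)
mean, std = gp_predict(design, outputs, theta_grid)
```

The emulator reproduces the training runs almost exactly, and its uncertainty grows between design points, which is the "well-defined uncertainty" that can be propagated into the likelihood. In practice the kernel hyperparameters are usually fit by maximizing the marginal likelihood, and many-observable outputs are often decorrelated (for example with principal component analysis) before emulation.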

My work focuses on performing such global analyses in the "hard sector": observables involving large momentum exchanges. I co-led a proof-of-principle extraction of the jet transport coefficient, $\hat{q}$, as a continuous function of the medium temperature and parton momentum [4], and followed it up with the largest hard-sector global analysis to date, using a computing allocation of $\mathcal{O}(10^7)$ core-hours awarded by the National Science Foundation [5].
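The statistical step behind such an extraction can be sketched with a random-walk Metropolis-Hastings sampler. In the toy example below, pseudo-data are generated from a hypothetical two-parameter model at known "true" parameters, and the sampler recovers a posterior distribution for those parameters under a flat prior and a Gaussian likelihood. The model `toy_model`, the parameter bounds, and the noise level are all assumptions for illustration; in a real global analysis the model prediction would typically come from an emulator, with the emulator uncertainty added to the likelihood covariance.

```python
import numpy as np

rng = np.random.default_rng(2)


def toy_model(params, x):
    """Hypothetical two-parameter model standing in for an (emulated) physics prediction."""
    a, b = params
    return a * np.exp(-b * x)


# Pseudo-data generated at known "true" parameters with Gaussian uncertainties.
x_data = np.linspace(0.0, 3.0, 15)
true_params = np.array([2.0, 0.7])
sigma = 0.05
y_data = toy_model(true_params, x_data) + rng.normal(0.0, sigma, x_data.size)

bounds = np.array([[0.0, 5.0], [0.0, 3.0]])  # flat prior inside this box


def log_posterior(params):
    """Log of (flat prior within `bounds`) times (Gaussian likelihood), up to a constant."""
    if np.any(params < bounds[:, 0]) or np.any(params > bounds[:, 1]):
        return -np.inf
    resid = (y_data - toy_model(params, x_data)) / sigma
    return -0.5 * np.sum(resid ** 2)


def metropolis(log_post, start, n_steps=20000, step=0.02):
    """Random-walk Metropolis-Hastings with an isotropic Gaussian proposal."""
    chain = np.empty((n_steps, start.size))
    current, current_lp = start.copy(), log_post(start)
    for i in range(n_steps):
        proposal = current + rng.normal(0.0, step, current.size)
        proposal_lp = log_post(proposal)
        # Accept with probability min(1, posterior ratio); out-of-bounds proposals are always rejected.
        if np.log(rng.uniform()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        chain[i] = current
    return chain


chain = metropolis(log_posterior, start=np.array([1.0, 1.0]))
posterior = chain[5000:]  # discard burn-in samples
print(posterior.mean(axis=0), posterior.std(axis=0))
```

The posterior mean lands close to `true_params`, and the posterior spread quantifies the parameter uncertainty. Extracting a continuous function such as $\hat{q}(T, p)$ follows the same pattern, with the sampled parameters being those of a chosen parametrization of that function.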